Database Management Services, Oracle Database Management Solution, Oracle Exadata, Remote Database Management

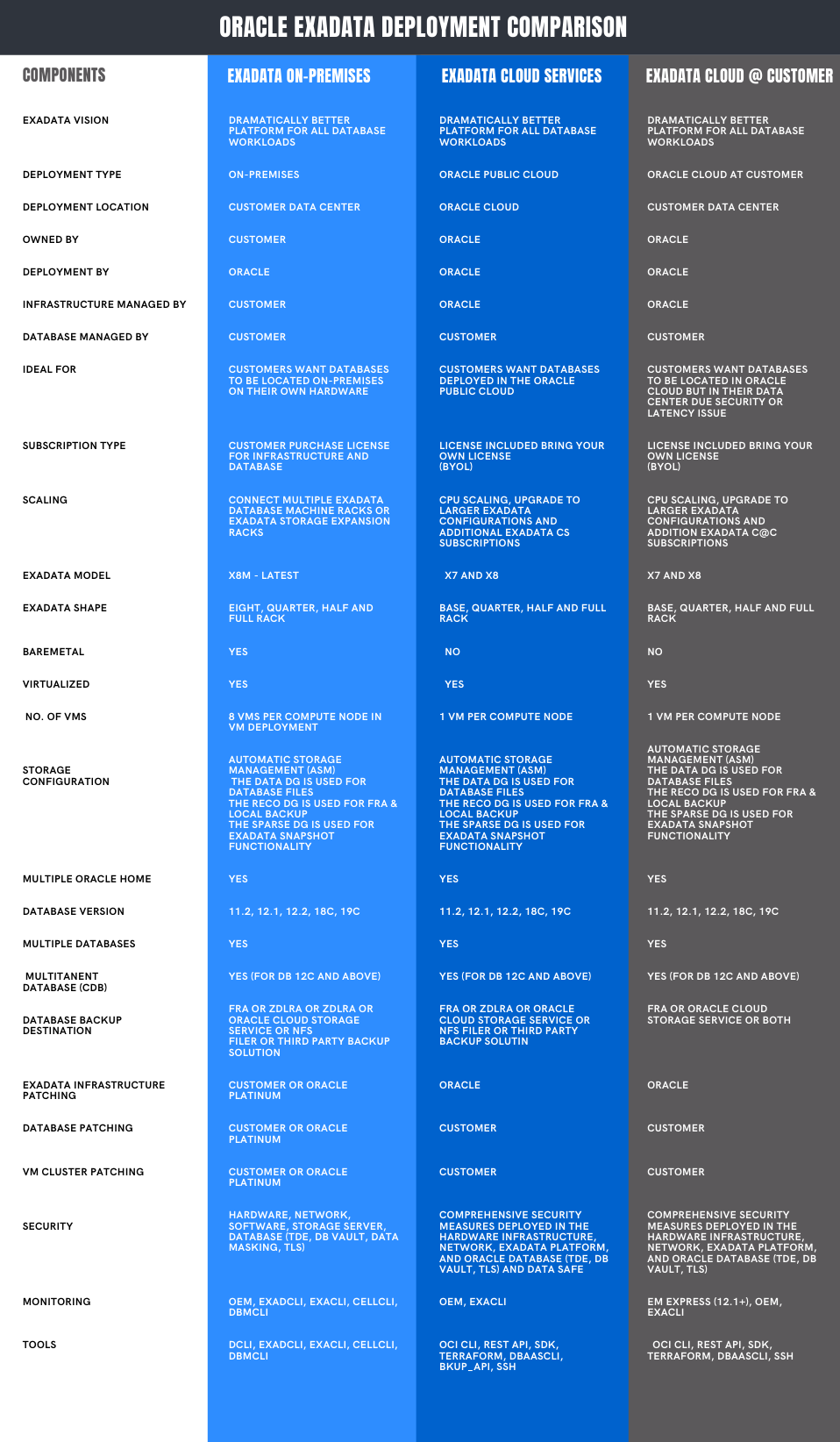

What are the different Exadata Deployment Option available?

– Exadata On-Premises

– Exadata Cloud Service

– Exadata Cloud at Customer

What is Oracle Exadata Database Machine?

Exadata Database Machine is an Engineered System which consists of Compute nodes, Storage cells and Infiniband Switches or RoCE Switches (starting X8M).

Exadata Database Machine or simply known as Exadata is:

- – An Engineered System

– A preconfigured combination of balanced Hardware and unique software

– A unique platform for running Oracle Databases

– Consists of Compute Grid, Storage Grid and Network Grid

– A fully integrated platform for Oracle Database

– Ideal for Database Consolidation platform

– It provides High Availability and High Performance for all types of Workloads

The Oracle Exadata Database Machine is an Engineered System designed to deliver extreme performance and high availability for all type of Oracle database workloads (OLTP, OLAP & Mixed Workload).

Exadata Database Machine Components

- 1. Compute nodes (Database Server Grid)

- 2. Exadata Storage Server (Storage Server Grid)

- 3. Network (Network Grid)

-

- – Exadata Infiniband switches

- – Exadata RoCE switches – From Exadata X8M

- 4. Other Components

-

- – Cisco Switch, PDUs

Oracle Exadata Cloud Service

Oracle Database Exadata Cloud Service delivers the world’s most advanced database cloud by combining the world’s #1 database technology and Exadata, the most powerful database platform, with the simplicity, agility and elasticity of a cloud-based deployment.

Oracle Exadata Cloud @ Customer

Exadata C@C is ideal for customers desiring cloud benefits but cannot move their databases to the public cloud due to sovereignty laws, industry regulations, corporate policies, security requirements, network latency, or organizations that find it impractical to move databases away from other tightly coupled on-premises IT infrastructure. Oracle Exadata C@C delivers the world’s most advanced database cloud to customers who require their databases to be located on-premises. It is identical to Oracle’s Exadata Cloud Service but located in customers’ own data centers and managed by Oracle.

Oracle Exadata Deployment Comparison

Let’s compare each Exadata deployment to learn about them in detail so we can choose the right deployment option for our Business need.